Feb 15, 2022

Note: All investigation information courtesy of BFU Investigation Report, AX001-1-2/02, May 2004. All Human Factors-related information is found in section 2.6 Human Factors.

When an accident happens, we are always quick to blame the human. It seems that the phrase 'human error' is the cause of everything that goes wrong, a sort of catch-all that places the blame solely on the operator. However, an important concept that I've learned in my human factors classes is that we should shift our focus away from the human and toward the system. If the system isn't designed with the capacities and limitations of its intended operators in mind, how can we expect operators to perform accurately, successfully, and safely? And if the situation is novel or the system is changed or rearranged in some way, how can we expect operators to react correctly without proper training? We understand that as experience increases, the propensity to commit errors decreases. But if you lose situation awareness, or are simply following the mental models you have been conditioned to use, how do you (and, more to the point, how should you) react? And what about technology? How should we use automation? Should we trust it? Whom should we trust more: the man or the machine? One accident that exemplifies each of the above concerns is the 2002 Überlingen mid-air collision. In this article, I provide factual information about the crash, analyze the human factors that contributed to it, explore the importance of organizational and managerial factors, and discuss why this tragedy fascinates me.

On the night of July 1, 2002, Bashkirian Airlines Flight 2937 (a Tupolev Tu-154M owned by the Russian charter company) and DHL Flight 611 (a Boeing 757-23APF) collided in midair over the southern German town of Überlingen, near the Swiss border. All of the passengers and crew on board both planes were killed, resulting in 71 total deaths. On February 24, 2004, more than a year and a half after the crash, Peter Nielsen, the Air Traffic Control Officer (ATCO) on duty at the time of the collision, was murdered by Vitaly Kaloyev, a Russian widower whose wife and two children had died in the accident.

Flight 2937 was a charter flight, carrying Russian schoolchildren on a school trip from Moscow to Barcelona, Spain, organized by the local UNESCO committee. Their original flight was canceled, leaving Flight 2937, flown by five Russian crewmembers, as the only plane ready to transport them. Flight 611 was a regularly scheduled cargo flight from Bergamo, Italy, to Brussels, Belgium. The plane was flown by a British captain and a Canadian first officer, both based in Bahrain. The two aircraft's planned routes crossed over southern Germany.

Around 23:30 local time, both aircraft were inside German airspace and flying at flight level 360. Their tracks were converging at the same altitude, putting them on a collision course. Although both planes were flying within Germany, the airspace was controlled by Skyguide, a private Swiss air traffic control company, from its control center in Zürich. Peter Nielsen was the only controller handling the airspace at the time of the accident; his colleague, who was also responsible for the same airspace, had taken an extended break, which was common practice at Skyguide. Nielsen's other colleague was controlling a different sector. Additionally, maintenance on Nielsen's radar system was scheduled for that night, which took the phone system out of service and turned off the collision warning function within the radar system. Minutes before the accident, Nielsen's attention was diverted to two other aircraft: one landing at a nearby airport, and the other passing through the airspace. As he was communicating with both planes, Flights 2937 and 611 were rapidly closing in on each other. Nielsen noticed the imminent collision and instructed Flight 2937 to descend to FL 350. Seconds after this instruction, the Tupolev's Traffic Alert and Collision Avoidance System (TCAS) instructed its pilots to climb. Flight 611's TCAS simultaneously instructed its crew to descend. The Russian crew, unsure which instruction to follow, began a rapid descent, as did Flight 611. At 23:35:32 local time, the 757's vertical stabilizer sliced completely through the Tu-154's fuselage. Both aircraft fell to the ground, painting the landscape of southern Germany with debris and dead bodies.

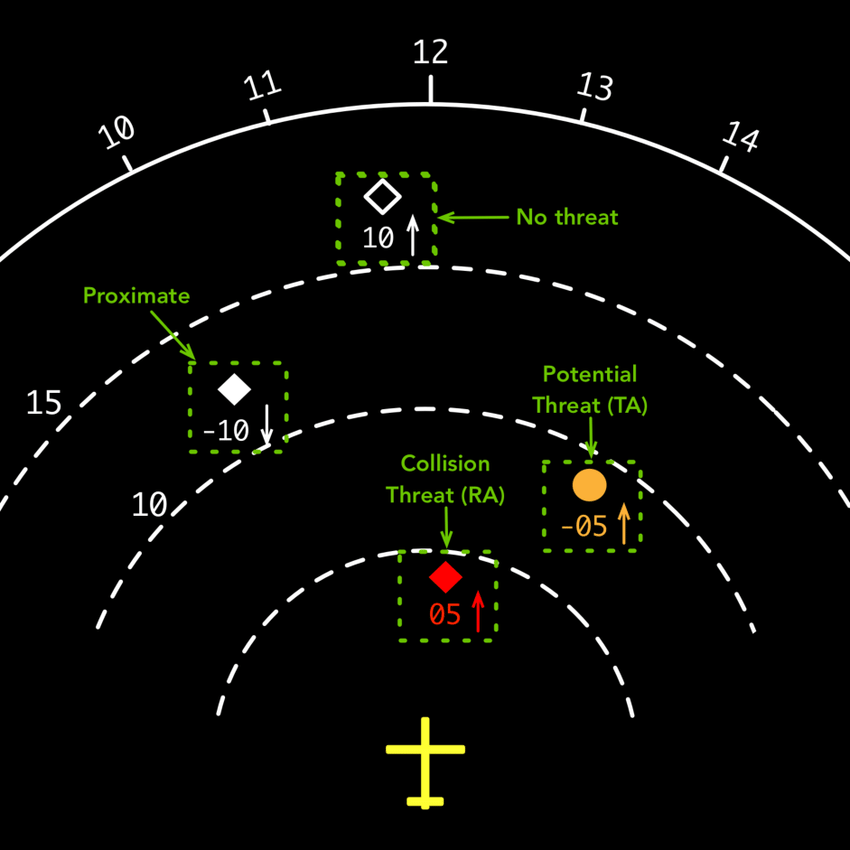

The final Investigation Report, as published by the BFU (Germany's Federal Bureau of Aircraft Accident Investigation), identifies a multitude of human factors issues as causal factors in the accident. Focusing first on both flight crews, the BFU recognizes that all pilots held a level of situation awareness (SA) before the collision that did not indicate inattention or loss of awareness. However, Flight 2937's pilots received two contradictory instructions, one from the controller and one from the TCAS, at almost the same time. Both crews were made aware of the other aircraft's presence one minute before colliding through their respective TCAS displays (example shown below); however, the contradictory instructions stymied the Russian pilots' mental models of what to do in a TCAS alert situation, which led to confusion, and ultimately a loss of SA seconds before the accident. Had the Russian pilots followed the TCAS command, as they were trained to do (training provided by Eurocontrol on behalf of the Russian Regulatory Authority), the collision would have been avoided.

The investigation places the majority of the blame on the human factors concerning Skyguide area control, specifically management, task management and distribution, team management, and work environment (as per Sections 2.6.1.1 - 2.6.1.5). Peter Nielsen was also blamed, but to a lesser extent, because he was following Skyguide management and company practices that all employees followed. The BFU notes that ATCOs taking extended breaks when the sector is less busy should have been prohibited. They are at the workplace to work, not to take longer breaks. Furthermore, the scheduled maintenance of the radar system made it more difficult for Nielsen to recognize an impending collision and alert others to it, for not only did the maintenance take the phone system and collision warning system out of service, but it also made radar returns appear more slowly on the display. The technicians did not warn Nielsen of these issues, nor was he previously aware of how maintenance would affect his systems. Finally, the introduction of the other two aircraft during the critical phase in which the ATCO could have identified 2937's and 611's collision course sowed the seeds of disaster. With an improperly working radar display, a colleague who was not present to relieve Nielsen of the mounting task load, and the two peripheral planes demanding a significant portion of Nielsen's SA and attention, it was almost impossible for there not to be an accident of some kind.

This accident has always fascinated me. I believe the crash and the events leading up to it can be described by the Swiss cheese model, as proposed by Reason (1990). Although it can be argued that every accident can be described using Reason's model, I think this particular accident is a prime example of how it works (i.e., the factors that could have gone wrong did go wrong, all at the perfect time). If even one error had not occurred (for example, had the Russian pilots followed TCAS instead of the ATCO), the collision could have been prevented altogether or at least reduced in severity.

I think the accident also raises questions about industrial-organizational human factors. In America, air traffic control is heavily regulated, almost to the point where you think there's a never-ending list of requirements and rules to follow. Since Skyguide is a private company, I question its management, training, and workplace policies. The BFU report discusses these policies and risk factors, but that discussion is too lengthy to include here. In human factors, we have come to know that training provided by the company, as well as the management itself, carries significant weight in terms of operator performance, specifically in high-workload environments.

In addition, I argue that principles from social psychology also help explain the contributing causal factors. The accident occurred just over a decade after the collapse of the Soviet Union. Under the Soviet Union, Russians were conditioned to obey the human with power without question. This contrasts with most Western cultures, wherein people are taught to voice their concerns and discuss them with their peers and superiors. Therefore, it can be argued that the Russian pilots followed Nielsen's command to descend because it matched their predisposition and mental model to always obey the human with more perceived power and/or knowledge than themselves. A few weeks ago, I had the chance to talk to a graduate student in the Aviation Safety Program at Embry-Riddle. Her areas of research include the language interactions between pilots, ATCOs, and instructors, and their effects on safety within aviation. It is interesting to see how this accident is an example of how language (specifically vocal intonations and intentions) directly affects both individual and team decision-making.

Along with the previous psychological interpretation, we can also point to trust in automation as a human factors issue. Because TCAS technology was fairly new in 2002, experts questioned whether the Russian pilots trusted the automation in their aircraft. The answer is a resounding no. If they had, the collision would have been avoided. One explanation could be voice intonation and a perceived sense of urgency. Nielsen's voice most likely sounded very alarmed, which the Russian crew perhaps interpreted as urgency and panic, whereas the TCAS computer voice sounded robotic and monotonous, and therefore may not have conveyed the sense of urgency required for the crew to obey the instruction. The automation issue, however, is part of a larger conversation. TCAS was implemented to avoid human error. Yet humans are in charge of designing, manufacturing, implementing, and maintaining automation. In every stage of this process, human errors occur. One factor engineers may not have accounted for is the impact of the TCAS computer voice on decision-making.

Hindsight bias tells us that taking into account the tone of voice and its relation to psychological processes is common sense. In fact, this bias tells us that all of the issues and the solutions just discussed are common sense. But just as some people insisted that the L.A. Rams were going to win the Super Bowl even before the game started, one cannot know in advance exactly how all of the small events and errors that occur along the way will shape the outcome.

Together, all of these factors culminated in an event that immediately claimed 71 lives and claimed another in its aftermath: that of Peter Nielsen, the ATCO on duty at the time of the collision.

I strongly believe that the investigation's findings, along with more stringent regulations and training methodologies, will continue to successfully prevent a repeat of this accident and promote safer skies.

2002 Überlingen mid-air collision. (2022, February 14). In Wikipedia. https://en.wikipedia.org/wiki/2002_%C3%9Cberlingen_mid-air_collision

German Federal Bureau of Aircraft Accident Investigation. (2004). Investigation Report AX001-1-2/02. https://reports.aviation-safety.net/2002/20020701-1_B752_A9C-DHL_T154_RA-85816.pdf

Reason, J. (1990). Human error. Cambridge University Press.

Wonder. (2021, May 6). The mid-air collision of flight 2937 and flight 611 | Mayday S2 EP4 | Wonder [Video]. YouTube. https://www.youtube.com/watch?v=iLWxy-SQ6hY

First published on LinkedIn on February 15, 2022.